ORCA AI Guardian

Test to PROTECT

Don't let AI leak what you can't afford to lose

As we race to integrate generative AI, some of us are doing so without fully understanding the risks.

Leaked data, biased responses, and compliance breaches can happen quietly and unexpectedly.

ORCA AI Guardian puts you back in control.

It continuously tests, monitors, and enforces your AI policies in real time, detecting risks before they become issues, protecting sensitive information, and making your AI safer, smarter, and fully aligned with your business.


Acts as a Gatekeeper for Sensitive Data

AI Guardian strips out or sanitises private and sensitive information (PII and secrets) before it reaches commercial AI systems and can restore this information when needed, keeping your data safe at every step.
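As a rough illustration of the strip-and-restore idea, here is a minimal Python sketch. It is not ORCA AI Guardian's implementation: the `PATTERNS` table, the `sanitise` and `restore` functions, and the `sk-` key format are all hypothetical, and two regular expressions are nowhere near enough to catch real-world PII and secrets.

```python
import re

# Hypothetical detection patterns, for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def sanitise(text):
    """Swap sensitive values for placeholders; return the clean text and a restore map."""
    vault = {}  # placeholder -> original value
    seen = {}   # original value -> placeholder, so repeats reuse one placeholder
    for label, pattern in PATTERNS.items():
        def swap(match, label=label):
            value = match.group(0)
            if value not in seen:
                token = f"<{label}_{len(vault) + 1}>"
                seen[value] = token
                vault[token] = value
            return seen[value]
        text = pattern.sub(swap, text)
    return text, vault


def restore(text, vault):
    """Put the original values back into text that still contains placeholders."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text


prompt = "Email jane.doe@example.com using key sk-abcdefghijklmnop1234"
clean, vault = sanitise(prompt)
print(clean)                  # the version that would be sent to an external AI system
print(restore(clean, vault))  # the original, recovered locally
```

The restore map stays on your side of the boundary, so the external AI system only ever sees the placeholders.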


Runs Thousands of AI Safety Tests

Guardian uses a special system called Menace to run over 10,000 different tests on your AI solutions, looking for problems like mistakes, bias, or people trying to trick your AI.

Finds Risks Before They Become Problems

Guardian acts like a friendly white-hat hacker, checking your chatbots, copilots, and automation tools for weaknesses so you can fix them before anyone else finds them.


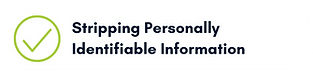

Makes Sure Your AI Follows the Rules

Guardian makes sure your AI sticks to policy. It checks whether your AI is breaking your company’s rules, such as sharing secrets, showing bias, or saying things it shouldn’t.


Builds Your AI Risk Register Automatically

If Guardian finds a risk, it logs it into a central system so you can keep track, fix issues, and show you’re following the rules if anyone asks.

Understands and Keeps Learning

Guardian learns how your company works (policies, language, and workflows) and adapts over time. It helps every AI tool you use follow your unique rules, making it smarter and safer the more you use it.

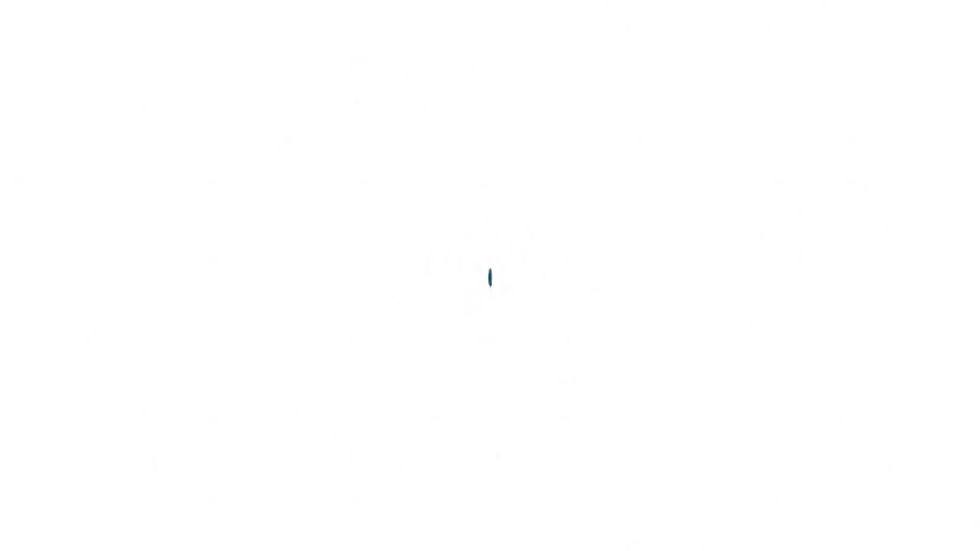

AI Guardian in Action

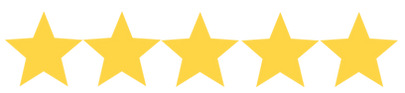

Virtual Veteran Case Study

State Library of Queensland’s “Charlie the Virtual Veteran” chatbot brought WWI history to life, but rapid success came with unexpected security challenges.

Within 48 hours of launch, over 15,000 user sessions were recorded, but malicious users exposed vulnerabilities through AI jailbreaks.

ORCA AI Guardian (formerly Red Tie AI) was deployed to secure the experience without compromising educational value.

With tools like Menace, Warden, and Profiler, ORCA AI Guardian prevented over 470 attacks, enabled intelligent context-based filtering, and preserved the integrity of the AI’s character, turning a reputational risk into an award-winning innovation in secure, public-facing AI.

10,000+ attack scenarios simulated

Using the ORCA AI Guardian Menace tool, over 10,000 red-team simulations identified and remediated 46 attack vectors before launch, securing the AI before it could be exploited again.

15,000+ user sessions in 48 hours

Despite high-volume public use, the system maintained 100% uptime and educational integrity, showing ORCA AI Guardian’s ability to scale securely without degrading user experience.

476 attacks prevented

After integration, ORCA AI Guardian proactively blocked 476 real-world threats, including 76 in the first four weeks, proving real-time defence at scale.

"We wouldn't recommend any organisation deploying a public or internal-facing AI system without implementing robust safeguard measures, such as the ORCA AI Guardian. Based on our experience with Virtual Veterans, the risks of unfiltered AI interactions are simply too significant to ignore. Having proper content monitoring and filtering systems in place isn't just a best practice, it's essential for responsible AI deployment in educational and public-facing environments."

Anna Raunik, State Library of Queensland